Introduction:

In general, Optimisation is the process of finding the best possible solution or outcome from a set of processes to attain a certain objective function by considering various factors that are subject to constraints to control the solution's outcomes.

In mathematical terms, we could define this subprocess as an optimisation that “involves selecting the best combination of parameters for decision variables to achieve the most favourable result for a given problem statement,” such as minimising costs or maximising profits.

Is Optimisation mandatory for Optimising “Machine Learning (ML)"?

Answer: The straight answer is “YES".

This article will discuss how optimisation plays a vital role in the ML life cycle and how to achieve the objective by analysing ML models and selecting the suitable feature(s) for a given problem statement.

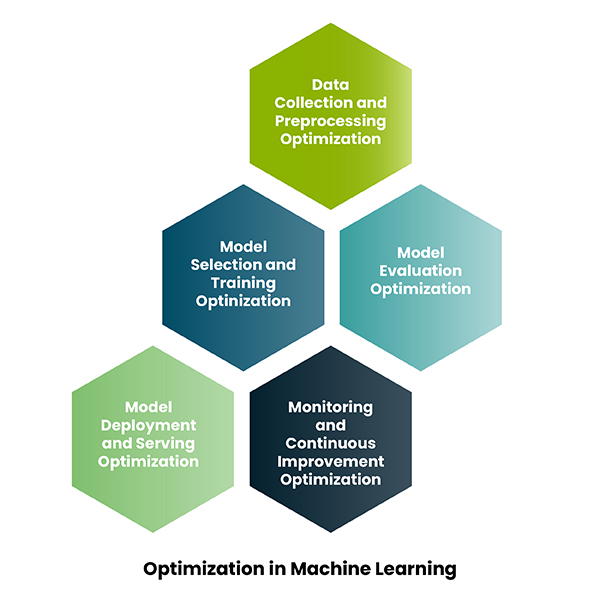

Optimisation can be applied at different stages in the Machine Learning lifecycle, from data preparation to model deployment. Multiple optimisation techniques are essential to improving model performance, ensuring efficiency, and achieving reliable, high-quality results.

Optimisation in Machine Learning

Optimisation can be done in the real world using the trial-and-error method (manual code change), whereby you keep introducing the appropriate techniques based on the nature of the objective and model selected for the problem statement. This process is a time-consuming but effective way to achieve feature optimisation, and another method is through automated feature selection techniques, which significantly improve model performance by reducing the difference between predicted and actual values.

Of Course! We have various Optimisation across specific to each stage of the ML life cycle.

Dаta Collection and Preprocessing Optimisation:

Data collection and preprocessing are the key stages next to business problem identification, which involves gathering and preparing data. Initially, the data is collected from reliable sources that are directly connected to the problem statement, such as APIs, web scraping, etc.

Next, data cleaning or cleaning is performed, which includes removing duplicates, handling missing values, and resolving any inconsistencies among the data to improve data quality and consistency.

The data is transformed into normalised and standard formats at this stage, making it uniform and ready for the next stage, such as data analysis.

Yes! Feature engineering is also applied to the dataset, such as creating new, relevant features and merging features from existing datasets to enhance the model’s predictive power.

Data Collection and Preprocessing Optimisation includes

Dаta Sampling and Dimensionality Reduction:

- Principal Component Analysis (PCA) helps reduce the dimensionality of data in terms of features and improves overall model interpretability.

- Feature Selection: It helps to avoid overfitting and reduces model complexity by removing redundant or unimportant features in the dataset.

Dаta Cleaning and Imputation:

- Automated Outlier Detection methods help to streamline data cleaning and prevent data quality issues during the model building.

- Imputation Techniques: It ensures that missing values are handled accurately and efficiently to keep the dаtaset healthy, especially with large dаtasets. Using the methods like K-Nearest Neighbours and Multiple Imputation by Chained Equations (MICE)

- Encoding Optimisation: This is a very effective method of choosing the correct encoding type based on the feature type can optimise model performance and training time such as One-Hot Encoding

Model Selection and Training Optimisation:

Model selection and training are critical phases in the machine learning life cycle. They involve choosing suitable models from possible models specific to the problem statement, tuning its parameters for different scenarios, which is a significant task, and training the model to achieve optimal performance and achieve the expected approximated accuracy in the results.

Model Selection and Training Optimisation includes

• Hyperparameter Tuning: Proper tuning can significantly improve a model’s accuracy, speed, and generalisability. “Hyperparameter Tuning” is a critical step in the ML life workflow, where the goal is to find the optimal set of hyperparameters for a model. Hyperparameters are external to the model and, unlike parameters learned from dаta during training, they influence both the training process and model performance.

- Grid Search and Random Search optimise hyperparameters by exploring different parameter values along with the model.

Optimising Loss Functions: Customising loss functions to suit specific problems, especially for imbalanced dаta to optimises model learning.

Regularisation: L1 (Lasso) and L2 (Ridge) Regularisation help to control overfitting and simplify models and highly recommended

Gradient Descent Optimisation: GD or Stochastic Gradient Descent (SGD) with mini batches improves the convergence speed for large dаtasets.

Model Evaluation Optimisation:

After the Model selection process, we must take care of model evaluation, a crucial phase in the ML life cycle. Even this step also requires optimisation without any doubt. It helps assess and improve a model's effectiveness, accuracy, and robustness before deploying the model into the production environment. The main objective is to evaluate the metrics to quantify how well a model performs on unseen data before the model goes into production, so that it helps to identify the best model, understand its strengths and weaknesses, and ensure it generalises well to new data.

Model Evaluation Optimisation includes

- K-Cross-Validation Techniques: In K-Fold Cross-Validation, the dаtaset is divided into “k” equally sised "folds", followed by this the model is trained on k-1 folds and validated on the remaining fold completion. This process is repeated “k” times, with each fold used once as a validation set. The average of the evaluation metrics from each fold is used as the final performance measure, in this validation process the typical values for k: 5 or 10.

- Other Optimisation techniques are Leave-One-Out Cross-Validation (LOOCV) and Time Series Cross-Validation optimise for time-series dаta (or) small dаtasets respectively.

- Bias-Variance Trade-off Optimisation: Balance bias and variance, improving model robustness and accuracy such as Ensemble Methods - Bagging, Boosting, and Stacking.

Model Evaluation follows Model Deployment and Serving Optimisation. These are the critical stages to completing model building and moving into production. Model deployment is the process of integrating a machine learning model into a production environment, where it can serve real users or applications. Model serving involves making the deployed model available for predictions to fulfil the objective of the problem statement.

Optimisation is a bit different at this stage since we have control over Data Pipeline Optimisation, Data Security and Compliance, CI/CD, Infrastructure, Frameworks, Scaling, Load Balancing, Caching, Latency Reduction, Monitoring and Health Checks, not limited to model and data level as we discussed earlier.

Deep dive into Feature Engineering

Feature Engineering is a critical step in ML, where raw data is transformed into a format more suitable for modelling. Effective feature engineering can significantly improve a model's performance by providing it with relevant, well-structured information.

Here are the critical questions about feature engineering:

4W-1H -Feature Engineering

What is Feature Engineering?

- It is one of the significant processes in the Data Science/Machine Learning life cycle. Here, we’re transforming the given data into a reasonable form that is easier to interpret.

- Making data more transparent to help the Machine Learning Model

- Creating new features to enhance the model.

Why Feature Engineering?

- NUMBER OF FEATURES could significantly impact the model considerably, so feature engineering is essential in the Data Science life cycle.

- Certainly, FE is IMPROVING THE PERFORMANCE of machine learning models.

When and Where is Feature Engineering?

- When there are many features in a given dataset, feature engineering can become challenging and exciting.

- Several features could significantly impact the model, so feature engineering is essential in the Data Science life cycle.

The feature engineering is a broad class that includes the following subdivisions, which will be applied to the ML life cycle at various stages.

- Feature Improvements

- Feature Selection

- Feature Extraction

- Feature Construction

- Feature Transformations

- Feature Learning

Feature Selection

This is one of the major processes in feature engineering, and it is a process of selecting a significant feature which helps to identify the dependent variables or predictors or fields or attributes from the original set of features in the given dataset or processed dataset for solving the problem statement. It is an essential part of the feature engineering process, including Feature Selection and Feature Extraction. The former focuses on choosing a subset of the existing features, and the latter generates new features. The main objective of feature selection is to improve the three primary factors: model performance, reduce computational cost, and enhance interpretability by removing redundant, irrelevant, or noisy data from the dataset and reducing overfitting , thereby improving model accuracy and efficiency.

Why Feature Selection is Important:

We have already discussed various optimisations in the ML workflow. In feature engineering, feature selection plays a significant role. Let’s review its importance.

- Improves Model Performance: Selecting the most relevant features/fields/attributes reduces overfitting, especially in cases with high dimensionality, which leads to better generalisation on unseen data.

- Reduced Training Time: Of course, a smaller subset of features reduces the high computational efforts and complexity among the feature correlations and speeds up the training process during the model's training.

- Enhances Model Interpretability: Fewer features and their correlations understanding make the model easier to understand and interpret by the ML engineers and model effectively; this helps during the explainability aspects.

- Prevents Overfitting: Irrelevant features add noise and unnecessary results because we couldn’t understand their impact in training and testing. It might take lots of effort and cost to achieve the expected results until we reach the appropriate results for answering the problem statements; otherwise, the model will learn patterns that don’t generalise well to new data.

Feature selection is crucial in the ML workflow, especially when dealing with high-dimensional datasets. We have discussed how it influences model performance, reduces computational costs, and makes models more interpretable.

By choosing the right feature selection method based on the given dаtaset and selected model, ML engineers can improve the overall effectiveness of their predictive models.

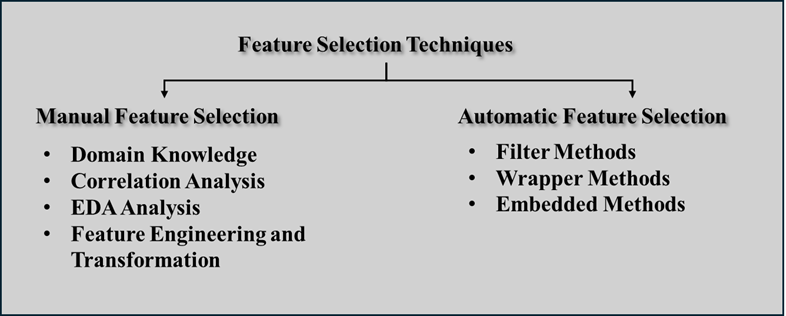

Feature Selection Techniques: This can be implemented by Manual or Auto (Libraries, Tools, Products).

Let's go through the Manual Feature Selection

Manual Feature Selection: In manual feature selection, the dаta scientist or subject matter expert (SME) evaluates features based on their understanding of the dаta and experience, the domain, and detailed study of insights from exploratory dаta analysis (EDA). The process is intuitive and relies on the expert's judgement rather than automated algorithms. This is a time consuming and costly process.

Manual Feature Selection approaches

- Domain Knowledge: Select features based on domain expertise and understanding of which features are likely to have the most impact on the target variable by selection process.

- Correlation Analysis: Use statistical methods to check correlation between features to understand how they are correlated with each other. High correlation between features indicates redundancy, so only one feature from such pairs may be kept for further analysis

- EDA Analysis: Under EDA process we can evaluate each feature(s) either individually or group wise for its relevance to the target. For example, visualising feature histograms, bar plots, heatmaps and pair plot distributions across target classes can reveal relationships and patterns, helping to identify relevant and irrelevant features. Such as univariate, bivariate and multi-variate.

- Feature Engineering and Transformation: Manually engineer new features or transform existing ones based on insights gained from the dаta.

Pros and Cons of Manual Feature Selection

Pros:

- Leverages domain knowledge, leading to more interpretable models and quicker analysis and get into the further analysis

- Fit to the dаtaset is small or straightforward, and automated methods may be overkill and additional efforts

Cons:

- Time-consuming, especially for large dаtasets and if the SMEs do not feel that the dаtaset is compatible for analysis and come to a conclusion.

- Prone to human bias, as decisions may rely on subjective judgement if the analysis is not taken care of in the right direction, so the team might miss subtle patterns or relationships.

Automatic Feature Selection

Automatic feature selection uses typically coded algorithms to choose the best subset of features. These methods can handle large datasets with high-dimensional data collection and are faster, with less time consumption and more objective results than manual selection.

Automated methods can be classified into Filter, Wrapper, and Embedded Methods.

Types of Feature Selection Techniques:

Automatic feature selection methods can be broadly categorised into three types:

Filter Methods, Wrapper Methods, and Embedded Methods.

Filter Methods: This is a straight examination of the feature's statistical relationship with the target variable, independent of any algorithm selected for the model building using the relevance of features in the dаtaset. The following is a list of those statistical methods. Filter methods are generally fast and computationally inexpensive.

- Correlation Coefficient: It measures the correlation between each feature and the target in the given dаtaset. During the feature selection process, the features with low correlation can be dropped or removed.

- Mutual Information: It measures the dependency between each feature and the target in the given dаtaset. During the feature selection process, the high mutual information suggests whether the feature is relevant or not.

- Chi-Squared Test: This is mainly used for categorical dаta from the dаtaset, this test evaluates whether the observed frequency of features is different from the expected frequencies such as collection of colours, city names, designations, etc.,

- Variance Threshold: This is a straightforward method that removes features with very low variance from the dаtaset; by assuming they don’t carry useful information for consideration for further analysis.

Wrapper Methods: Wrapper methods evaluate different subsets of features by training a model on each subset and assessing performance and recording the observations and this is better than filter methods, as it is more computationally intensive in nature. At the same time, it can capture feature interactions in a straight way. It is more accurate but computationally intensive, especially with large dаtasets.

- Forward Selection: This method is something interesting and starts with an empty model and keeps adding features one by one, based on which feature improves the model accuracy the most at each step carry forward until team satisfied the model out comes.

- Backward Elimination: This method is the reverse process of forward selection, and it starts with all features from the dаtaset and keeps removing them one by one and monitoring the accuracy, removing the least significant feature at each step and stop the process.

- Recursive Feature Elimination (RFE): In this method we will take care of training the model with all features and iteratively remove the least important features based on model performance and share the list. This is often used with algorithms that provide feature importance, like decision trees.

Embedded Methods: This method relies on the algorithm and works along with that and its built-in characteristics and capabilities. Simply add the penalty proportion to the model and access the outcomes. These methods are less computationally intensive than other methods and offer a good balance between performance and efficiency perspective.

- Lasso Regression (L1 Regularisation): It adds a penalty proportional to the absolute value of feature coefficients, making the less important feature coefficients into zero and followed by effectively removing them mathematically.

- Ridge Regression (L2 Regularisation): Similar to L1, it adds a penalty proportional to the square of feature coefficients, which reduces coefficients of less essential features.

Pros and Cons of Automatic Feature Selection

Pros:

- It's a major objective, especially with large dаtasets, and this is very systematic and efficient,

- Time consumption: Obviously, it reduces the model training time and computational complexity by selecting only the relevant features which are required for analysis.

Cons:

- It some situations the computationally expensive when dealing with high-dimensional dаta.

- Chance for lack of interpretability and challenge during explainability since the features are selected based on statistical metrics.

- It absolutely requires knowledge of the appropriate technique that is fit for the problem statement specific to the dаtaset and model.

Conclusion

We have discussed the Optimisation essentials and how to achieve the same in Machine Learning life cycle, by finding the most efficient set of parameters to achieve specific objectives, such as minimising errors or maximising accuracy. We understand that in the Machine Learning process, Optimisation plays a critical role across various stages, from dаta preprocessing and model selection to deployment and serving. Explored different Optimisation techniques to enhance the model's performance, reduce computational costs, and streamline processes. These techniques, whether in manual adjustments or automated feature selection, improve overall model accuracy by minimising the gap between predicted and actual values. Consequently, each phase of the machine learning lifecycle can benefit from Optimisation, which helps ensure that models are both robust and scalable for real-world applications.

Here are the key takeaways from this article:

- Importance of Optimisation in ML: How Optimisation is critical for improving model performance, enhancing accuracy, and minimising costs, making it essential at various stages of the machine learning lifecycle.

- Optimisation in ML Stages: What are the ML life cycle and their respective Optimisation techniques involvements.

- Dаta Collection and Preprocessing: Effective dаta preprocessing optimises dаta quality and structure, reducing noise and improving model input.

- Model Selection and Training: Techniques like hyperparameter tuning, regularisation, and gradient descent are vital for optimising model training.

- Model Evaluation: Cross-validation and bias-variance trade-offs help in fine-tuning models and ensuring robustness on unseen dаta.

- Deployment and Serving: Optimisation ensures efficient model deployment, reliable performance, and scalability in production environments.

- Feature Engineering:

- Feature Selection vs. Feature Extraction: Feature selection involves choosing the most relevant features from the dаtaset, while feature extraction creates new features, both improving model interpretability and performance.

- Manual vs. Automatic Selection: Manual feature selection leverages domain expertise, whereas automated selection uses algorithms to systematically select features, each approach offering unique benefits.

- Benefits of Feature Selection: Reduces overfitting, enhances model interpretability, lowers computational costs, and speeds up training by focusing only on relevant dаta.

- Feature Selection Techniques:

- Filter Methods: Use statistical measures to rank features by relevance (e.g., correlation, mutual information).

- Wrapper Methods: Evaluate feature subsets through model performance (e.g., forward selection, backward elimination).

- Embedded Methods: Integrate selection directly within the model (e.g., Lasso, Ridge Regression).

- Practical Approaches to Optimisation: Trial-and-error and automated tuning are practical Optimisation approaches that can be tailored to specific model objectives and dаtasets.

- Optimisation and Real-World Impact: Each stage’s Optimisation ensures that models are more effective, scalable, and reliable in real-world applications, addressing practical business objectives such as cost reduction and operational efficiency.

.jpg)

interesting blog thank you.

Thank you

This blog was super helpful and on point, thank you so much. I'm reading all the other blogs as well. Thanks.

Thank you